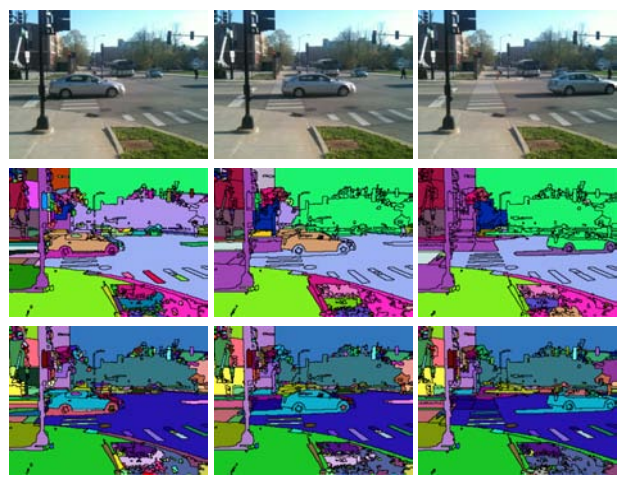

Unsupervised video segmentation is a challenging problem because it involves a large amount of data, and image segments undergo noisy variations in color, texture and motion with time. However, there are significant redundancies that can help disambiguate the effects of noise. To exploit these redundancies and obtain the most spatio-temporally consistent video segmentation, we formulate the problem as a consistent labeling problem by exploiting higher order image structure. A label stands for a specific moving segment. Each segment (or region) is treated as a random variable which is to be assigned a label. Regions assigned the same label comprise a 3D space-time segment, or a region tube. The labels can also be automatically created or terminated at any frame in the video sequence, to allow objects entering or leaving the scene. To formulate this problem, we use the CRF (conditional random field) model. Unlike conventional CRF which has only unary and binary potentials, we also use higher order potentials to favor label consistency among disconnected spatial and temporal segments.

- H.-T. Cheng, N. Ahuja, Exploiting nonlocal spatiotemporal structure for video segmentation, Computer Vision and Pattern Recognition (CVPR) 2012, Providence, RI, June 2012.