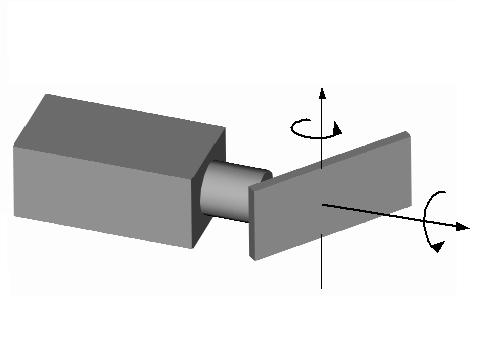

A visual depth sensor composed of a single camera and a transparent plate rotating about the optical axis in front of the camera. Depth is estimated from the disparities of scene points observed in multiple images acquired through the rotating plate.

We propose a novel depth-sensing imaging system composed of a single camera and a plane-parallel plate rotating about the optical axis of the camera. Unlike conventional stereo systems, it uses only one camera to capture stereo pairs, which can improve the accuracy of correspondence detection, as is the case for any single-camera stereo system. The proposed system captures multiple images simply by rotating the plate. With multiple stereo pairs, it is possible to obtain precise depth estimates without encountering matching ambiguities, even for objects with low texture. Given the large number of resulting images, in conjunction with the estimated depth map, we show that the proposed system is also capable of acquiring super-resolution images. Finally, experimental results on reconstructing 3D structures and recovering high-resolution textures are presented.

Stereo is one of the most widely explored sources of scene depth. Stereo usually refers to spatial stereo, wherein two cameras, separated by a baseline, simultaneously capture stereo image pairs. The spatial disparity in the images of the same scene feature then captures the feature’s depth. More than two cameras can also be used to capture the disparity information across multiple views.

An alternative to such spatial stereo is temporal stereo, wherein a single camera is moved in turn to each of the same viewpoints to capture the two or more images sequentially. This gives up simultaneous imaging, and therefore the ability to handle fast-moving objects, but it reduces the number of cameras to one and eliminates the photometric calibration between cameras that feature or intensity matching of stereo images may otherwise require.

We propose a single-camera depth estimation system that captures a large number of images after the incoming light from a scene point has been deflected in a manner that depends on the object depth. The deflection mechanism is the passage of light through a thick glass plate placed in front of an ordinary camera at a certain orientation to the optical axis. Estimating depth requires at least two images captured under two different plate poses. However, a larger number of images, containing redundant depth information, is acquired by changing the plate orientation sequentially, e.g. by rotating and/or reorienting the plate. Rotating the plate at a fixed orientation with respect to the optical axis is a mechanically convenient way of obtaining a large number of depth-coded images, followed, if desired, by more plate orientations and rotations to acquire more images, as illustrated in Fig. 1. An analysis of the correspondences among the set of images yields depth estimates. High-quality, dense depth estimation distinguishes this new camera from other single-camera stereo systems.

Depth Dependent Pixel Displacement

It is well known from optics that a light ray passing through a plane-parallel plate undergoes a lateral displacement. In a camera-plate system, this displacement appears as a pixel shift in the image. For a given object point, we assume that the plate shifts the point within a plane parallel to the image plane.

The displacements of a pixel depend differently on changes in the plate tilt angle and rotation angle. Using both changes leads to robust estimation because the lack of sensitivity to one type of change is complemented by higher sensitivity to the other. Depth estimation is done by minimizing a cost function which is overdetermined due to the large number of images available. The cost function includes the error due to the fit of the result to the multiple estimates available at a single pixel, and the local roughness of the surface at the pixel.
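As a rough illustration of a cost of this shape, the sketch below combines a data term (squared error between the depth at a pixel and the multiple estimates available there) with a roughness term (squared difference between neighboring depths) on a single row of pixels. The quadratic form of both terms, the weight `smooth_weight`, and the Gauss-Seidel minimizer are assumptions made for the example, not the formulation used in the actual system.

```python
def depth_cost(depth, estimates, smooth_weight):
    """Data-fit error plus local roughness for one row of pixels.

    depth[i]      -- candidate depth at pixel i
    estimates[i]  -- list of depth estimates available at pixel i
    smooth_weight -- hypothetical weight on the roughness term
    """
    data = sum((z - m) ** 2 for z, ms in zip(depth, estimates) for m in ms)
    rough = sum((a - b) ** 2 for a, b in zip(depth, depth[1:]))
    return data + smooth_weight * rough


def minimize_cost(estimates, smooth_weight, sweeps=200):
    """Gauss-Seidel sweeps: each update minimizes the cost over one pixel."""
    depth = [sum(ms) / len(ms) for ms in estimates]  # start from per-pixel means
    n = len(depth)
    for _ in range(sweeps):
        for i in range(n):
            neighbors = [depth[j] for j in (i - 1, i + 1) if 0 <= j < n]
            num = sum(estimates[i]) + smooth_weight * sum(neighbors)
            den = len(estimates[i]) + smooth_weight * len(neighbors)
            depth[i] = num / den
    return depth


# Made-up estimates; pixel 2 has one outlying estimate.
row_estimates = [[1.0, 1.2], [1.1, 0.9], [3.0, 1.0], [1.0, 1.1]]
smoothed = minimize_cost(row_estimates, smooth_weight=1.0)
```

With `smooth_weight` set to zero the result reduces to the per-pixel mean of the estimates; larger weights pull each depth toward its neighbors.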

The dimensions of the plate and its tilt angle, among other parameters, have to be selected properly to achieve good depth estimates. The plate parameters affect the amount of depth-sensitive displacement in the image. A larger refractive index and a thicker plate yield a larger displacement, which corresponds to a higher depth resolution. However, they also introduce larger chromatic aberration, which may degrade the image quality. The tilt angle of the plate also affects the displacement: the larger the tilt angle, the larger the pixel displacement for the same depth. However, as the tilt angle increases, the required size of the plate increases dramatically.
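These trends can be checked against the standard textbook expression for the lateral shift of a ray through a plane-parallel plate in air, d = t sin θ (1 − cos θ / √(n² − sin² θ)), where t is the thickness, n the refractive index, and θ the angle of incidence. The sketch below evaluates it for a ray along the optical axis, for which θ equals the plate tilt; it is an illustration under that assumption rather than the system's full imaging model, and the function name is hypothetical.

```python
import math


def lateral_displacement(thickness, refractive_index, tilt_deg):
    """Lateral shift of an axial ray through a tilted plane-parallel plate.

    Uses d = t * sin(theta) * (1 - cos(theta) / sqrt(n^2 - sin^2(theta))),
    with the plate surrounded by air. Result is in the units of `thickness`.
    """
    theta = math.radians(tilt_deg)
    s, c = math.sin(theta), math.cos(theta)
    return thickness * s * (1.0 - c / math.sqrt(refractive_index ** 2 - s ** 2))


# Values matching the prototype description: 13 mm plate, n ~ 1.5, ~45 degree tilt.
shift_mm = lateral_displacement(13.0, 1.5, 45.0)
print(f"axial-ray shift: {shift_mm:.2f} mm")
```

Increasing any of the three arguments increases the returned shift, in line with the trade-offs described above.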

Prototype

We have developed a prototype with the rotating plate oriented at a fixed tilt angle. It requires calibration only once, in the beginning, after which the system acquires images continuously without the need for any further calibration. This is achieved by rotating the plate continuously about the x, y and/or z axes, acquiring images at video rate. The images of a scene point, acquired during rotation, lie along a 4-dimensional manifold in the space defined by the object depth in the viewing direction, and the three rotation angles. To estimate object depth in a given direction, we simply find the best estimate of the intersection of the line in that direction with the manifold samples obtained from the images acquired during rotation. Each pixel in each image thus contributes to the number of manifold samples. Since the locations of pixels in different images define a denser array of directions than possible with a single (orientation) image grid, the system yields depth estimates along a direction array denser than the original images.

We used a Sony DFX900 color camera equipped with a 16 mm lens, and an ordinary glass plate 13 mm thick with a refractive index of around 1.5. The plate was mounted on a rotary stage and tilted approximately 45 degrees with respect to the image plane. A total of 36 plate poses, evenly distributed over 360 degrees, were calibrated and used to recover depth and synthesize the super-resolution images.
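To make the geometry concrete, the following sketch recovers depth for one scene point from its pixel positions under 36 evenly spaced plate poses. It rests on simplifying assumptions that are not taken from the system itself: a pinhole camera, a plate that shifts the point by a fixed distance in a plane parallel to the image plane, a shift direction that rotates with the plate, and known correspondences. Under those assumptions the pixel traces a circle of radius `focal_px * plate_shift_mm / depth`, so a linear least-squares fit of the radius gives the depth. The focal length in pixels and the 4.28 mm shift (roughly what the standard plane-parallel-plate formula gives for a 13 mm plate with index 1.5 at 45 degrees) are illustrative values.

```python
import math


def plate_directions(num_poses):
    """Unit shift directions for evenly spaced plate rotation angles."""
    return [(math.cos(2 * math.pi * k / num_poses),
             math.sin(2 * math.pi * k / num_poses)) for k in range(num_poses)]


def estimate_depth(observations, directions, focal_px, plate_shift_mm):
    """Fit p_k = p0 + s * u_k by least squares, then depth = f * shift / s.

    observations -- pixel positions (x, y) of one scene point, one per pose
    directions   -- unit shift direction (ux, uy) for each pose
    Returns (depth in mm, unshifted pixel position p0).
    """
    n = len(observations)
    p_mean = [sum(p[i] for p in observations) / n for i in (0, 1)]
    u_mean = [sum(u[i] for u in directions) / n for i in (0, 1)]
    num = sum((p[i] - p_mean[i]) * (u[i] - u_mean[i])
              for p, u in zip(observations, directions) for i in (0, 1))
    den = sum((u[i] - u_mean[i]) ** 2 for u in directions for i in (0, 1))
    s = num / den  # radius of the pixel's circular track, in pixels
    p0 = (p_mean[0] - s * u_mean[0], p_mean[1] - s * u_mean[1])
    return focal_px * plate_shift_mm / s, p0


# Synthetic check: a point at 800 mm, hypothetical focal length of 3000 px.
dirs = plate_directions(36)
radius_px = 3000.0 * 4.28 / 800.0
obs = [(320.0 + radius_px * ux, 240.0 + radius_px * uy) for ux, uy in dirs]
depth_mm, p0 = estimate_depth(obs, dirs, focal_px=3000.0, plate_shift_mm=4.28)
```

Because all 36 observations constrain just three unknowns per point, the fit is heavily overdetermined, which is what makes the estimate tolerant of noise in individual correspondences.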

We tested our system with two objects: a house and a monster head. The experimental results for the house object are shown in Fig. 2. Fig. 2a is one of the input images taken through the plate, Fig. 2b shows the recovered depth map, and Fig. 2c shows a new view generated from the reconstructed house model.

Fig. 3 shows the experimental results for the monster head object. Figs. 3a to 3c show one of the input images, the recovered depth map, and a new view of the reconstructed head model, respectively. This result indicates that the camera performs well for objects having a limited amount of texture.

- C. Gao and N. Ahuja, Single Camera Stereo Using Planar Parallel Plate, IEEE International Conference on Pattern Recognition, Cambridge, UK, August 2004, Vol. 4, 108-111.

- C. Gao and N. Ahuja, A Refractive Camera for Acquiring Stereo Depth and Super-Resolution Images, Proceedings IEEE Conference on Computer Vision and Pattern Recognition (CVPR), New York, NY Vol. 2, June 2006, 2316-2323.